Building a strong community culture is incredibly important for the long-term success of any community. Without a great culture, a community can start to scare off new members, harm existing members, and damage your brand. But “culture” is one of those things that seems so touchy-feely that it’s easy to wonder if you have any control over it.

Thankfully, over my 15+ years working with communities at companies like HubSpot and Reddit, I’ve uncovered some of the core building blocks of a strong community culture.

A Strong Cultural Vision

You can’t have a strong culture if you don’t know what you want it to be! Taking the time to think about what vibe you want for your community, and what vibe will serve both your members and your business, is worthwhile. Are you creating a raucous nightclub or a quiet library? A messy fun run or a professional race? Writing this out will give you a north star to execute against.

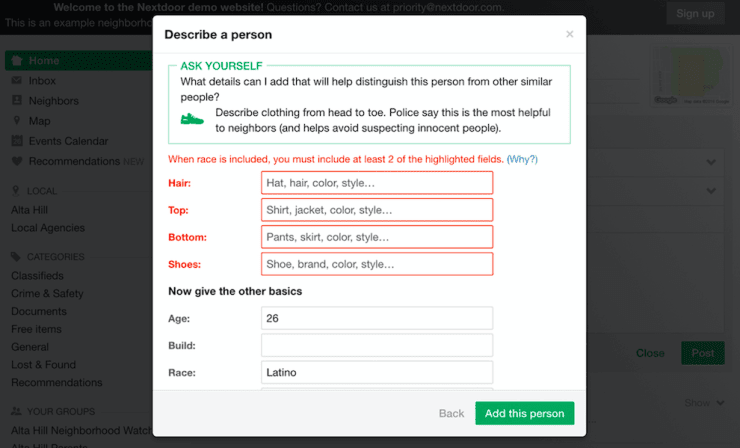

Clear Guidelines

Correspondingly, it’s hard to enforce a culture if members don’t know what it’s supposed to be. Capturing the dos and don’ts of your community in a formal document will help guide your members and give you something to point to when you have to hand out punishments. The Coral Project has a great guide to building a code of conduct.

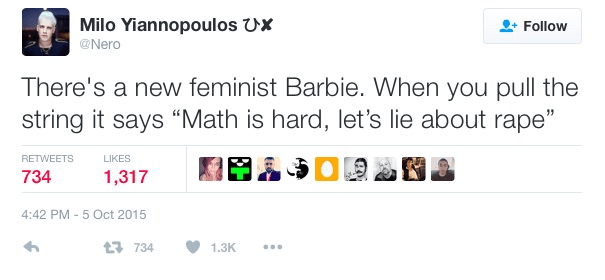

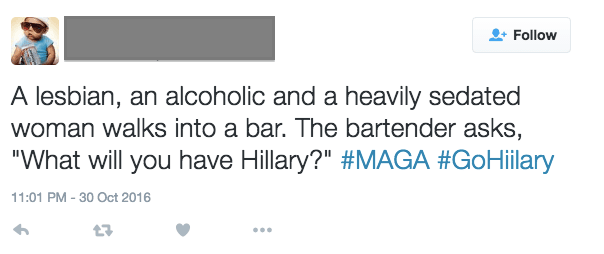

I recommend making your rules specific enough to be clear, but not so targeted (“you can’t say words x, y, and z”) that you have to constantly amend them to account for new, creative trolls. (And let me tell you, from 5 years at Reddit: they will always come up with new ways to be nasty.) And don’t forget to display your rules prominently: research has shown that doing so can reduce problematic posts.

The penalties you assign will depend on your community and the severity of the transgression. In some cases, you may want to consider a three-strikes rule; in others, you may have zero tolerance.

Great Founding Members

The founding members of your community are going to set the vibe. Whatever content they post will be what the larger member base sees when they join. These are the village elders that newbies will look up to. So you absolutely must screen your founding members carefully to ensure both that they embody your cultural vision and that they’re committed to helping you deliver on it.

Staff That Model Behavior

Just as your members will look up to your founding members, they’ll look up to you and your staff. The moment you break cultural norms, everyone will think it’s OK to do the same. Make sure your team knows the rules and the vibe, and sticks to them diligently.

Positive Reinforcement

Studies show that positive reinforcement tends to be more effective than punishment. Give shout-outs to the members you see doing an amazing job of living up to your values. Consider awards or surprise-and-delight budgets for these folks. But also consider positive reinforcement for your problem children: if you rain praise down on someone when they make the right choice, they may move away from the wrong choices they had been making before.

Consistency of Punishment

Even if it’s not as effective as positive reinforcement, we still need to enforce our rules. Importantly, research suggests that the consistency of punishment matters more than its severity: it’s hard to prove that the death penalty decreases crime, whereas studies have shown that hard-to-avoid DUI checkpoints are quite effective.

This means making it easy for members to report transgressions, setting up automation to catch troublemakers, and enforcing the rules the same way every time. Even if someone has historically been a good member of the community, you have to treat them the same as everyone else.

Evolution of Culture as Necessary – WITH Your Community

Communities are living, breathing, evolving entities, and it’s rare for their culture to remain static or for a ruleset to cover every situation until the end of time. You will likely need to evolve your guidelines over time. To do this successfully, consider involving your community in the discussion and being transparent about the changes by default.

The Secret Weapon

What makes a strong community culture can’t truly fit into a blog post; it’s the day-to-day work of community professionals nurturing, supporting, and enforcing norms in their communities. If your business hasn’t hired a community professional, that’s my cheat code for you: hire someone who is great at this.

“Rules” photo by Joshua Miranda

Cars photo by Michael Pointner